AI can empower businesses to unlock new potential and quickly extract meaningful information and insights, but those benefits come with responsibility.

At RingCentral, we take our responsibilities very seriously, and our customers’ trust is our top priority. Both our proprietary AI technologies and those from trusted third parties are grounded in our ongoing commitment to privacy, security, and transparency, which we put into practice through privacy by design.

Privacy by design

Privacy shouldn’t be an afterthought. We practice privacy by design by embedding privacy principles across all of our products and services that use AI. When developing a new product or offering, our engineering team works closely with our privacy team to understand the privacy requirements from the outset. Our privacy review is an integral part of product development. We pursue transparency, privacy, and explainability by identifying applicable requirements, determining how product and AI features may affect our customers and users, assessing risks and recommending mitigations, and documenting how the AI works.

In RingSense, privacy requirements and data protection are implemented from the start by product managers and engineers working in collaboration with our privacy team. All customer data that goes into RingSense, and all data that RingSense generates, including outputs and transcripts, receives the same security protections we apply to all of our customer data.

Our privacy by design approach extends to the third parties that RingCentral works with. RingCentral includes AI assessments in our vendor due diligence process to ensure that vendors and other third parties comply with, and are aligned with, our approach to AI privacy.

Transparency forward

At RingCentral, trustworthy AI means protecting our customers and their data while maintaining our commitment to privacy, security, and transparency. That commitment extends to the AI itself, down to the data it is trained on, which is why we are transparent about how data is collected and used. We do not use customer data to train our AI models, and we do not allow our third-party vendors to use our customers’ data to train their own AI models.

In the Product Privacy Datasheets we publish for each of our services on our Trust Center, customers can find detailed information on how AI uses their data, for what purposes, and to produce what outputs. By sharing information, guidelines, and principles for AI usage, we help our customers maintain trust with their employees, partners, and customers.

On the AI tab of our Trust Center, we also share our AI Transparency Whitepaper, which describes RingCentral’s approach to trustworthy AI to help our customers and partners understand how our products use AI.

Partner for resilience

We prioritize our relationships with our customers and are committed to ensuring that customers keep full control over how they use AI and how our AI interacts with their data. RingCentral enables account owners to make informed decisions about which RingCentral AI-enabled systems to use, through clear notice of AI integration and opt-in capabilities.

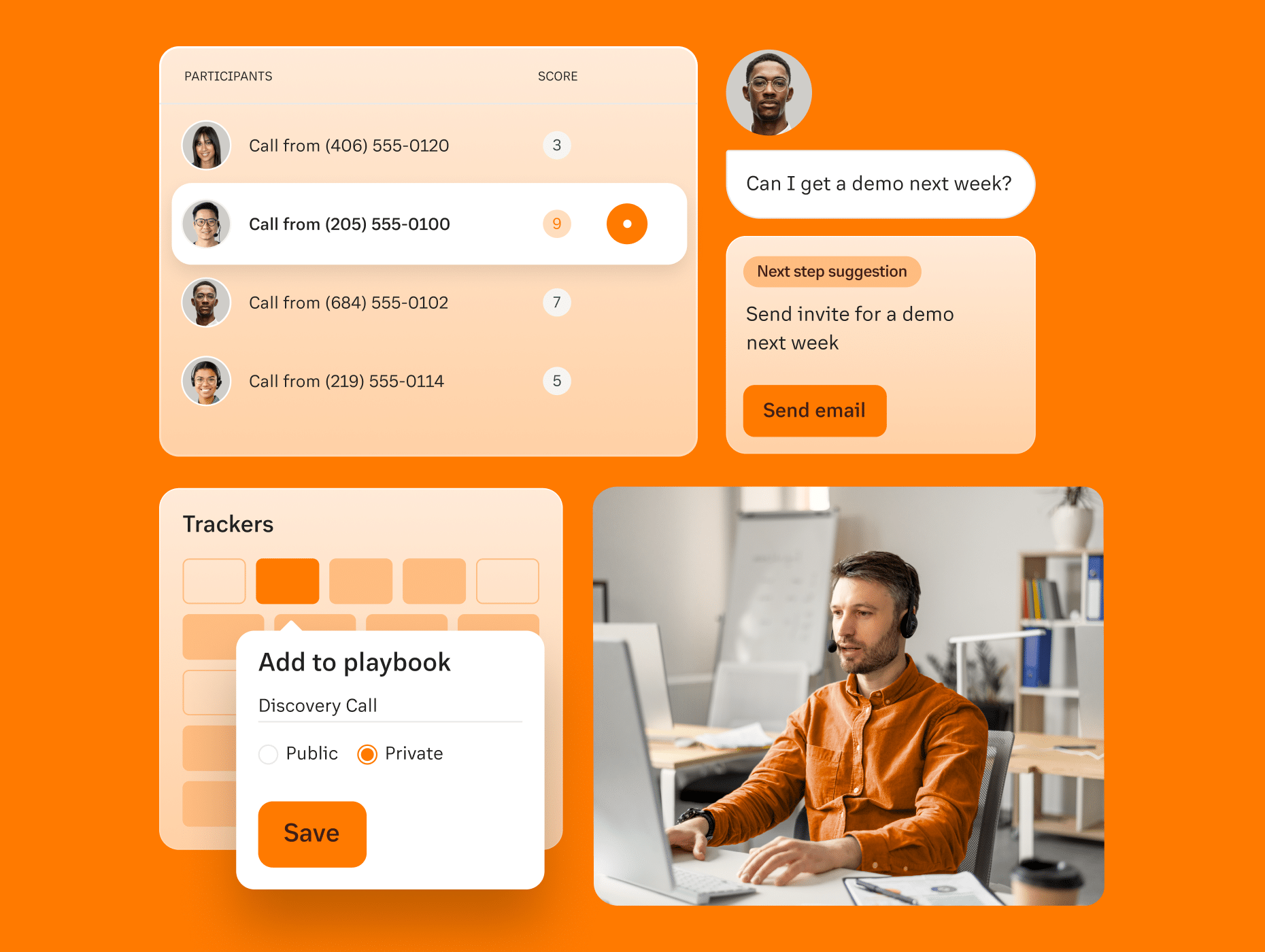

For example, our products provide a feature for transcribing calls and meetings, which a user must affirmatively enable. Once transcription is enabled, AI converts speech into text to produce a transcript. When customers choose to enable this feature, call participants receive a notice of transcription so they can make an informed decision about whether to continue participating.

Our customers have choices, with fully customizable features and functionality. By enabling our customers to make decisions based on the information our AI provides, we help them drive better business outcomes.

Accurate AI & removing bias

Assessing potential bias in our AI systems is a critical part of our development process. We consider all stakeholders involved in the use of our AI and the potential impact our AI may have on them. The assessment of potential biases and risks is an integral part of the product review process.

Our AI use cases involve a human in the loop who evaluates the outputs of our AI systems. For example, in Smart Notes we do not monitor the content of the notes produced, but we can infer from user actions whether the notes are accurate. If users frequently edit the notes, that signals to RingCentral that the prompts need adjusting.

We test our AI features for potential bias and undesirable outputs, and we test the tools for equality and accessibility across various demographics. Each AI feature and service is reviewed by our legal team and relevant stakeholders to assess whether it is inclusive of all customers.

Our guiding principles

At RingCentral, everything we do is built on our foundation of trust. Our AI governance program guides our development of trustworthy AI to foster customer-centric innovation. We believe AI implementation is a shared responsibility with our customers, which is why we are transparent about our core principles for trustworthy AI and how we align with each:

- Safe: At RingCentral, we believe AI-enabled systems should not endanger human life, property, privacy, or the environment.

- Secure: AI-enabled systems should maintain confidentiality, integrity, and availability through protection mechanisms that prevent unauthorized access and use.

- Transparent: Information about AI-enabled systems and their outputs should be available to users interacting with the systems.

- Explainable and interpretable: AI-enabled systems should be able to provide information that describes how they function.

- Privacy enhanced: AI-enabled systems should be developed and used in compliance with privacy laws and RingCentral privacy policies.

- Fair: Development and use of AI-enabled systems at RingCentral should consider equality and equity by addressing issues such as harmful bias and discrimination.

We are proud to empower our customers to safeguard against and manage AI risks by providing the resources and tools they need to get the most out of AI while protecting all stakeholders involved. With trust as our main goal, we look forward to partnering with each and every one of our customers to further the responsible and ethical use of AI.

To learn more about our approach to trustworthy AI, visit our Trust Center and download our RingCentral AI Transparency Whitepaper.

Originally published Apr 18, 2024