Contact centers carry the weight of your brand’s reputation with every call, chat, or message. Hundreds or thousands of customer interactions happen daily, yet many leaders only see a fraction of what really takes place.

Most teams still rely on outdated quality assurance methods built for a time when sampling a few calls seemed enough. It no longer is.

Supervisors, already stretched thin, try to spot problems early, but blind spots grow larger than they realize.

In this post, we’ll look at where manual QA falls short, how AI-driven quality management gives supervisors full visibility, and how RingCentral helps contact centers stop operating with blind spots and start making every interaction count.

The hidden problem: Traditional QA can’t keep up

No team wants to believe its quality processes are failing, but the numbers prove it. Supervisors and analysts pour time into listening to calls, scoring interactions, and delivering feedback. Yet only about 1 to 2 percent of total conversations get reviewed.

That leaves 98 percent or more untouched. Hidden performance issues, small policy slips, and repeated customer frustrations spread under the radar until they escalate into churn or compliance breaches.
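To put that gap in numbers, here is a minimal sketch of the arithmetic. It assumes each call is picked for review independently with the same probability, which is a simplification and not a description of how any particular QA team or product samples. The function name and the example counts are illustrative only.

```python
def chance_issue_is_missed(affected_calls: int, review_rate: float) -> float:
    """Probability that none of the affected calls gets reviewed.

    Assumes every call is sampled independently with probability
    `review_rate` (an illustrative simplification).
    """
    return (1.0 - review_rate) ** affected_calls


if __name__ == "__main__":
    # Hypothetical scenario: the same problem occurs in 10, 50, or 100 calls
    # while reviewers sample 2 percent of all conversations.
    for affected in (10, 50, 100):
        missed = chance_issue_is_missed(affected, review_rate=0.02)
        print(f"{affected} affected calls: {missed:.0%} chance none is reviewed")
```

Under these assumptions, at a 2 percent review rate, a problem that shows up in 10 calls has roughly an 82 percent chance of never reaching a reviewer.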

Manual sampling covers too little

Random call sampling worked decades ago when volume stayed low and customer expectations were simpler. Today, the scale of customer interactions makes that approach obsolete.

Even a dedicated QA team cannot keep up with the thousands of conversations flowing across phone lines, chat windows, and social channels every week.

By sampling such a small slice, supervisors base decisions on chance. Some agents handle high-risk interactions that never get reviewed.

Others get scored repeatedly for easy calls that do not test their skills.

This gap distorts performance reviews. Promotions, bonuses, and performance plans often depend on partial data that fails to capture an agent’s real work.

When feedback depends on random samples instead of complete visibility, strong performers can get overlooked.

Meanwhile, agents who struggle keep repeating the same mistakes because no one sees the consistent pattern soon enough to step in and coach effectively.

QA teams lose time while customers lose confidence

Contact centers pay a high price for manual review that rarely delivers the depth leaders expect. QA analysts and supervisors can spend entire days listening to calls, transcribing notes, and logging issues.

This work adds up quickly and keeps valuable people buried in admin tasks instead of coaching agents or addressing root causes. The more calls they try to review, the more time they lose that could go to improving performance in the moment.

While QA teams get stuck on repetitive reviews, the customer impact grows in the background. Small problems compound. Trends that frustrate callers linger because no one spots them soon enough to resolve them at the source.

When agents keep repeating the same missteps and supervisors stay buried in manual tasks, customers feel the difference — they repeat themselves, get inconsistent answers, and lose confidence in the service.

Escalations increase, negative feedback spreads, and the cost to repair reputation damage later far outweighs the effort it would have taken to fix the problem early with better visibility.

How RingCentral AI Quality Management closes the gaps manual QA leaves behind

Most contact centers want deeper insight into agent performance and customer experience, but face the same limits: too many conversations, too little time, and not enough resources to review them all.

RingCentral AI Quality Management tackles these issues by doing what manual QA simply cannot — scanning every interaction, surfacing patterns early, and giving supervisors clear, consistent information they can act on.

This shifts quality management from a random sampling exercise to a reliable, day-to-day workflow that spots recurring problems, closes gaps faster, and supports fair agent coaching.

Here’s how RingCentral makes this level of visibility and action possible:

100% coverage without adding more overhead

RingCentral’s AI reviews every voice, chat, and digital interaction automatically. No interaction falls through the cracks, so when a customer raises the same issue again and again, the pattern shows up instead of going unheard.

This 100% coverage cuts down on surprises. Patterns like repeated script deviations, policy misses, or signs of non-compliance come to light before they become big headaches. Leaders see exactly what needs to change, without spending hours sorting through recordings by hand.

Lighter post-call work for agents

Anyone working a contact center floor knows how much time disappears after the call ends. Transcripts, summaries, action items — these tasks drain energy and often feel repetitive. RingCentral’s AI handles this admin automatically. Calls get documented accurately and follow-ups stay clear.

With that burden lifted, agents put more energy into helping the next customer instead of retyping what already happened. The benefit extends to the customer too. Accurate notes mean the next agent picks up with zero confusion. Fewer mistakes, less repeat explaining, better service.

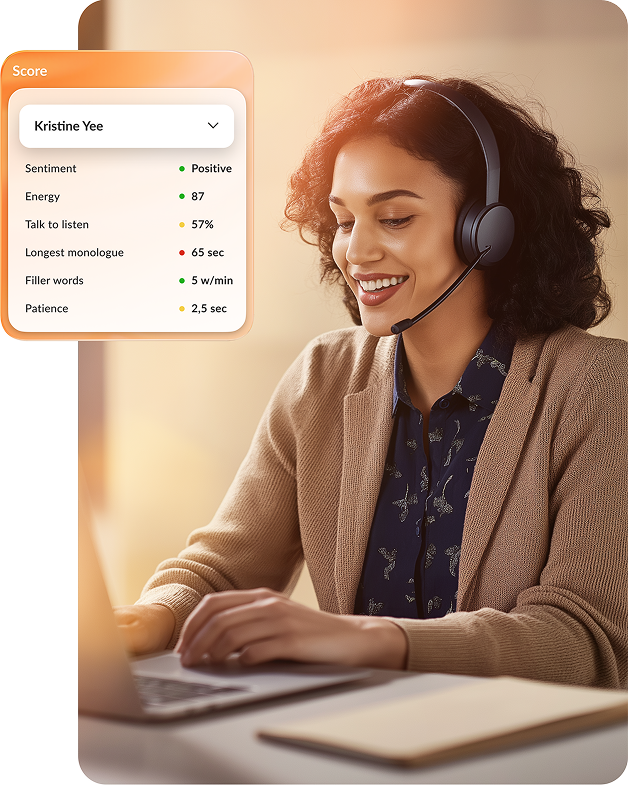

Scoring that stays fair and consistent

When reviews rely on scattered samples, performance scoring can feel arbitrary. One agent might get flagged for a tone slip that goes unnoticed with a different agent. RingCentral’s automated scoring applies the same standards across all interactions. Every agent knows where they stand based on a broad, balanced view.

This consistency keeps coaching grounded in facts. Supervisors don’t waste time defending scores or smoothing over disputes. Instead, they bring concrete examples to one-on-ones, making each session practical. Agents see exactly what to adjust, and what’s working well, which helps them improve faster.
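As a rough illustration of what "the same standards across all interactions" can mean, here is a minimal sketch of a weighted scorecard applied identically to every transcript. The criteria, weights, and phrase checks are hypothetical and much simpler than anything a real product would use. This is not RingCentral's scoring logic or API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Criterion:
    name: str
    weight: int
    passed: Callable[[str], bool]  # receives the full transcript text


# Hypothetical scorecard: plain phrase checks stand in for real evaluation.
SCORECARD = [
    Criterion("greeting", 2, lambda t: "thank you for calling" in t.lower()),
    Criterion("verification", 3, lambda t: "verify" in t.lower()),
    Criterion("closing", 1, lambda t: "anything else" in t.lower()),
]


def score(transcript: str, scorecard: list[Criterion] = SCORECARD) -> float:
    """Return a 0-100 score using the same criteria for every transcript."""
    total = sum(c.weight for c in scorecard)
    earned = sum(c.weight for c in scorecard if c.passed(transcript))
    return round(100 * earned / total, 1)


if __name__ == "__main__":
    call = "Thank you for calling. Let me verify your account. Anything else?"
    print(score(call))
```

Because every transcript runs through the same list of criteria, two agents who make the same slip lose the same points, which is the property the section describes.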

Clearer feedback and more helpful oversight

Quality reviews often stall when supervisors spend too much time hunting for “good examples.” RingCentral’s AI Quality Management flags calls that stand out for strong performance or recurring missteps. Managers spend less time searching and more time addressing what actually affects outcomes.

If multiple managers oversee an agent, the system’s multi-manager access ensures no piece of feedback gets lost. Dotted-line managers, team leads, or quality coaches all work with the same evidence. That means agents get more useful input that connects across teams — not conflicting advice from people looking at different data.

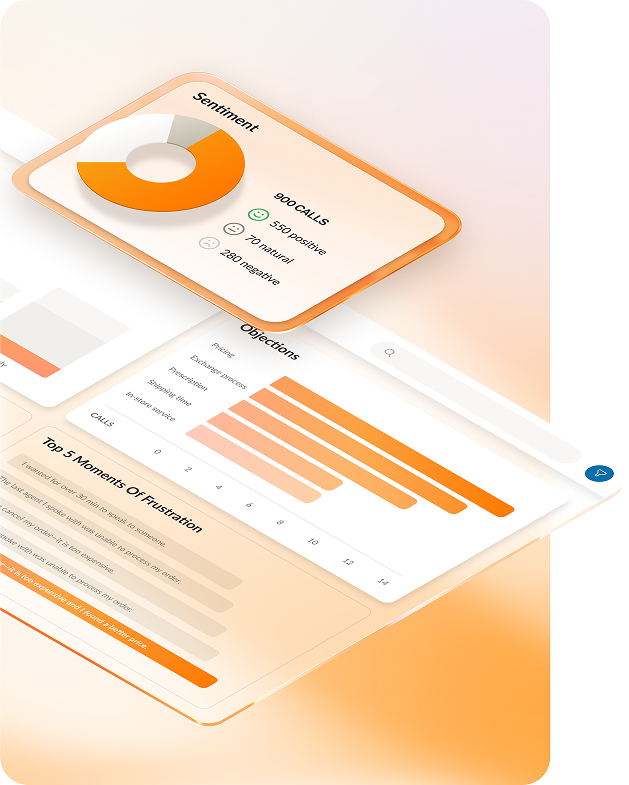

Tracks patterns customers feel first

Customer sentiment shifts and repeated complaints can hide in everyday interactions. Unless someone listens for them, they spread quietly. RingCentral’s AI looks for keywords, repeated objections, and competitor mentions that signal changing customer needs or growing frustration.

Supervisors get a clear signal when certain topics spike, so they can adjust scripts or workflows before those issues show up in negative reviews or lost customers. These trends help teams stay ahead of problems instead of chasing complaints once damage is done.
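One simple way to picture a topic spike is to compare today's keyword counts against a recent baseline. The sketch below is an assumption-laden illustration, not a description of how RingCentral detects trends. The function names, the `factor` and `min_count` thresholds, and the sample transcripts are all hypothetical.

```python
from collections import Counter
from statistics import mean


def count_mentions(transcripts: list[str], keywords: list[str]) -> Counter:
    """Count how many transcripts mention each keyword (keywords in lowercase)."""
    counts: Counter = Counter()
    for text in transcripts:
        lowered = text.lower()
        for kw in keywords:
            if kw in lowered:
                counts[kw] += 1
    return counts


def spiking_keywords(history: list[Counter], today: Counter,
                     factor: float = 2.0, min_count: int = 3) -> list[str]:
    """Flag keywords whose count today exceeds `factor` times the daily average."""
    flagged = []
    for kw, n in today.items():
        baseline = mean(day[kw] for day in history) if history else 0.0
        if n >= min_count and n > factor * baseline:
            flagged.append(kw)
    return sorted(flagged)


if __name__ == "__main__":
    history = [Counter({"refund": 1, "cancel": 2}), Counter({"refund": 1, "cancel": 2})]
    today = count_mentions(
        ["I want a refund now", "Refund please", "Where is my refund?",
         "How do I cancel?", "Please cancel", "Cancel my plan"],
        ["refund", "cancel"],
    )
    print(spiking_keywords(history, today))
```

In this made-up data, "refund" triples against its baseline and gets flagged, while "cancel" rises only slightly and does not. A real system would also need to account for volume changes and phrasing variants.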

Works across channels, not just calls

Modern customers don’t stick to phone calls alone. One might start with chat, shift to email, then escalate to a call if they don’t get what they need. RingCentral’s AI covers all of it in a single system. That means no gaps where trends or mistakes slip by unnoticed because they happened outside the phone queue.

Smarter scorecard calibration is another bonus. Writing good, fair scoring criteria can eat up days of manager time. RingCentral’s AI helps tighten questions, removes vague language, and flags areas that no longer align with business goals. Teams keep scorecards clear and useful without reinventing them from scratch every few months.

Makes supervisors’ workday more focused

Supervisors already juggle more than scoring and sampling. Scheduling, escalations, and coaching take up every spare minute. RingCentral’s AI Quality Management points them straight to what needs attention instead of drowning them in raw transcripts. Automated playback links directly to the moment a score or flag came from, so no one wastes time piecing together context.

This focus means supervisors spend more time developing agents and fixing issues early. Less time gets lost sorting through mountains of unstructured data. Better decisions happen because the right details come forward when they matter — and quality management becomes a daily habit, not an afterthought.

Makes agent training more meaningful

Regular training matters, but it is often built on that 1 to 2 percent of reviewed calls or, maybe even worse, delivered as one generic course assigned to every agent. Without customized, targeted training, agent satisfaction drops and the training loses its effectiveness.

AI Quality Management goes beyond raw data to provide actionable insights. It can summarize each agent’s strengths and opportunities based on every interaction. With a click, supervisors can generate and share customized training plans for each agent. This makes the training meaningful and relevant for the agent, which helps boost their performance.

The QA bottleneck is over – if you embrace AI

Contact centers that still rely on manual sampling alone take unnecessary risks every day. Customers expect consistency across every interaction, yet most teams still review only a fraction of calls. That mismatch costs trust, time, and valuable insight.

Supervisors do not need more static reports or random spot checks. They need a system that shows exactly where to focus before small issues grow.

This shift to AI turns QA from an occasional spot-check into a practical, day-to-day tool for improving service quality and agent performance — even as volume and expectations climb.

Ready to unlock the power of AI? Discover what’s possible with RingCentral AI Quality Management today.

Originally published Jul 11, 2025