Category

RingCentral newsdesk, RingCentral products

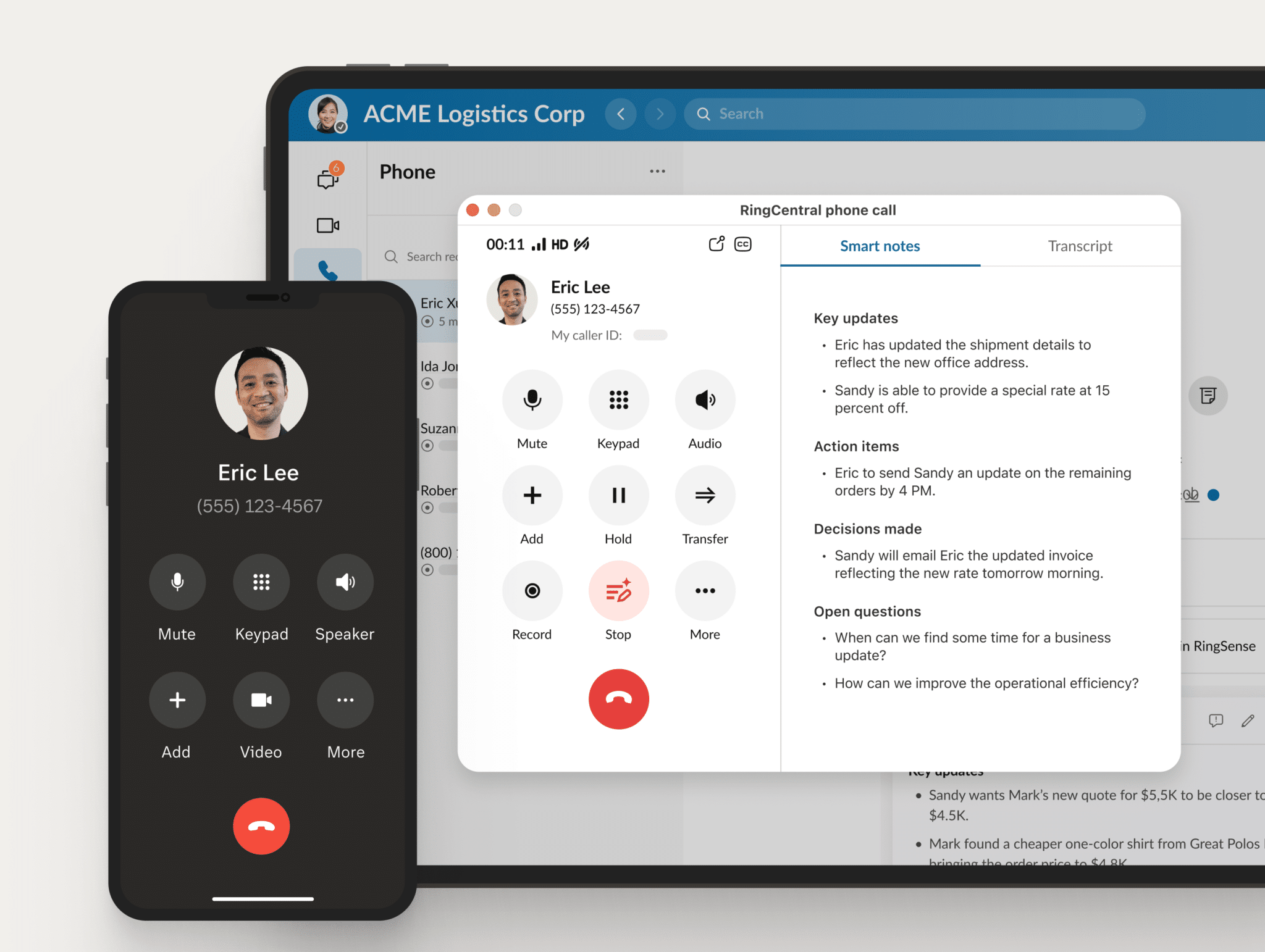

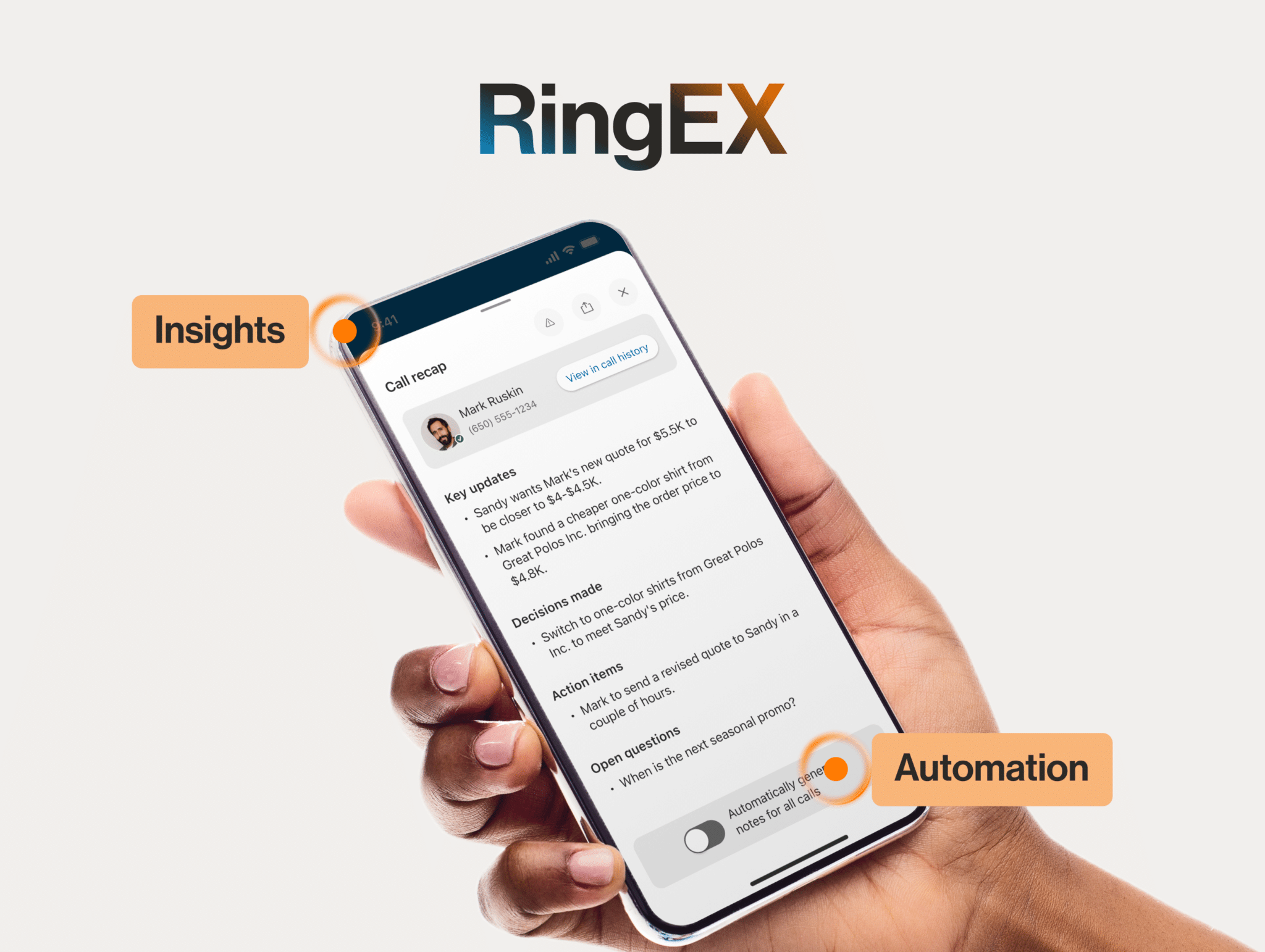

RingCentral Reimagines the Business Phone System with Real-Time AI

A new era of AI at work: In today’s dynamic work landscape, integrated collaboration and customer experiences are the cornerstones for businesses to provide a ...

Industry highlights

6 financial services trends to watch in 2023

A new year means it’s time to look ahead at financial services trends on the rise. Global inflation was the dominant story in the financial sector throughout 2022. In 2023, businesses and ...

Startup advice: Quotes from 7 famous entrepreneurs

If you’re looking for solid startup advice, it can be hard to find tips to trust. The startup world is still relatively new, despite the recent explosion of these small-yet-mighty companies. In a ...

6 healthcare trends to watch in 2023

’Tis the season to look ahead at healthcare trends for the year! As you know, the healthcare sector has gone through massive evolution in the last few years. The global pandemic provided a ...

The best property management software (& other useful ...

Working in real estate, you’ve got to be prepared for a fast-paced work environment and able to think on your feet. Making deals happen takes a lot of groundwork. Meeting clients, checking in ...

7 retail call center best practices

Highlights: The following best practices for retail call centers are discussed: establish key performance indicators to evaluate and improve results; continuously coach reps to pursue individual ...

10 simple student voice strategies

Highlights: Students are the foundation of your school, but often, their voices are underrepresented. Supporting student voice leads to more student engagement, and ultimately to better school ...